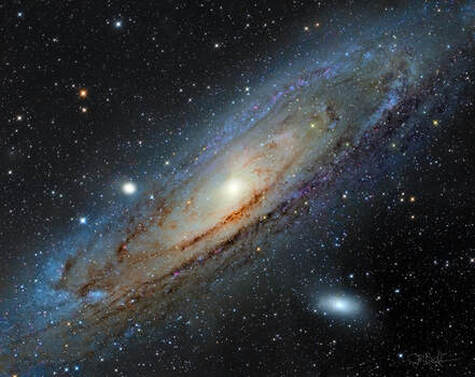

Arguably the brightest and most magnificent object outside of our own solar system, the Orion Nebula is easily visible as a naked eye object, even in light polluted skies. It is shown here with the "Running Man" Nebula, NGC 1977. The views through a small telescope or binoculars are outstanding, and they just get better in larger aperture scopes. The bright center portion of the nebula features four stars of similar magnitude known as the "Trapezium." It's the heart of a massive complex of young stars being formed from the gases and dust which give the nebula its shape. It is truly a stellar nursery.

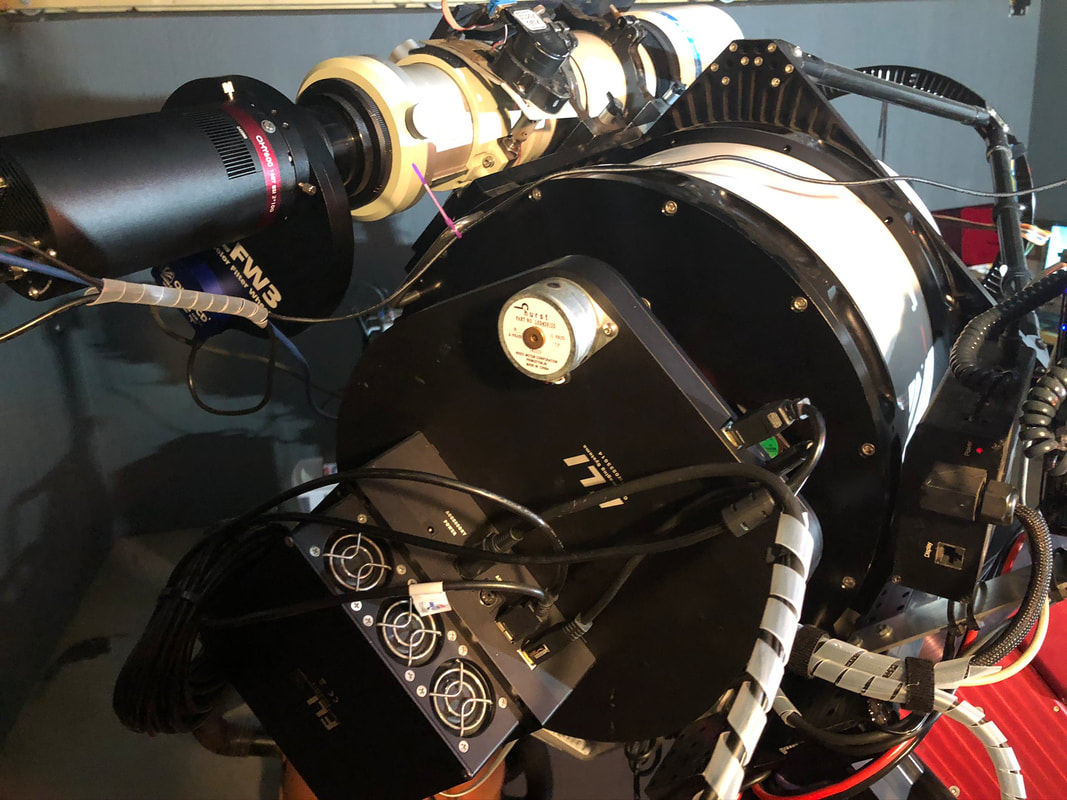

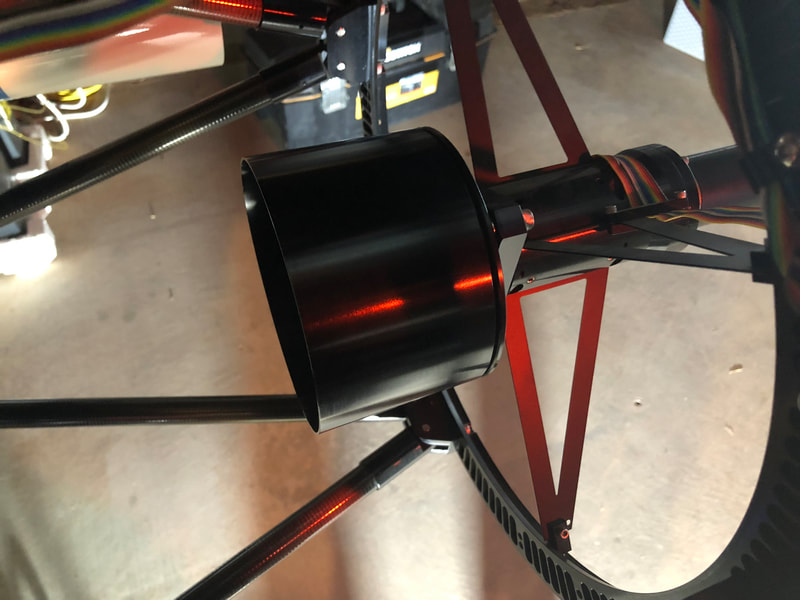

I took this image during the first couple of weeks in December 2019. It took nearly 17 hours of total exposure time to collect enough light through my small, high quality 4” refractor. While an image doesn’t have to take that long, it becomes necessary in order to cleanly reveal much of the faint dust and gas within the entire ~4 degree field of view. Our full moon spans about a half-degree from our perspective, so the nebula itself is not all that small, and it demonstrates that we use telescopes primarily to collect more light, not necessarily to “zoom in on stuff.”
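For anyone who likes to see the arithmetic, here is a quick back-of-the-envelope sketch of that comparison. It uses only the two rough figures mentioned above (a field of view of about 4 degrees and a full moon of about half a degree); the function and variable names are just my own for illustration.

```python
# Rough figures from the paragraph above; both are approximations.
FIELD_OF_VIEW_DEG = 4.0  # approximate width of the image's field of view
FULL_MOON_DEG = 0.5      # approximate apparent diameter of the full moon


def moons_across(field_deg: float, moon_deg: float = FULL_MOON_DEG) -> float:
    """Return how many full-moon diameters fit side by side across a field."""
    return field_deg / moon_deg


print(f"About {moons_across(FIELD_OF_VIEW_DEG):.0f} full moons fit across the frame")
# prints: About 8 full moons fit across the frame
```

Eight moons side by side is a big patch of sky, which is why a small, wide-field refractor suits this target.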

But I often get asked where the colors come from in my photos; after all, color doesn’t typically appear when viewing through a telescope. Because of this, many feel that it must be fake, since if your eyes don’t see it, it can’t be real, right?

Well, interestingly, this image of the Orion Nebula can also help us with a deeper understanding of biology. Go figure!

The Orion Nebula is unique in a very special way…it’s one of the few deep sky objects that can show color visually through a telescope. People who can see this color will report a shade of blue or green, where our eyes are most sensitive. The amount and quality of light required to trigger such “color vision” depends on the person. And this is true about light in general…we all need a minimum amount of it before we can begin to detect the world around us. We talk about this amount in terms of luminance, which is the intensity of the light per unit area. These luminance values are collected by both the "rods" and the "cones" in our eyes.

The highly-sensitive rods - there are 20 times as many rods as cones in our eyes - detect light in shades of gray. In low light situations, the rods are triggered first. We accept this fact the moment we put on our red shirts in the morning only to realize 15 minutes later that we are wearing green. Truly, some people are better than others with color detection in the dark, but why do I feel that I might be the only person to ever put on the wrong shirt by mistake?

Because we are creatures of biology, slowly wearing out as we age, the elderly among us will require a higher threshold of light before vision occurs. As such, in low light conditions, your grand-kids will see you first. But before either of us can see in glorious color, the color sensors (cones) will need to be activated by a stronger source of light. Once enough of it hits the eyes, three types of cones collect light in three broad bands of red, green, and blue (RGB). Once enough light from these bands is sensed, the brain mixes these spectra into specific colors or hues.

The interaction between rods and cones is interesting. Once the cones activate, the need for rods diminishes. During the daytime, our perception of color and of light intensity (luminance) becomes almost entirely the result of cone activation. There is an overlap between the rods and cones during periods when it's "sorta dark" outside. Think about those nights shortly after sunset when you can still perceive color in some of the things around you. As the cones become less effective - the colors disappear - the rods take up the heavier workload. This overlap is obvious when you consider that as color fades from our vision, it doesn't SNAP from color to grayscale immediately.

Overall, our color-sensing cones can detect wavelengths within a spectrum of approximately 400nm to 700nm, from blue to red respectively, though at the extremes of that range, light is barely detectable. So in practice, what we see as “true color” will comprise information mostly in the 450nm to 650nm range.

There are a couple of takeaways to be made from these facts.

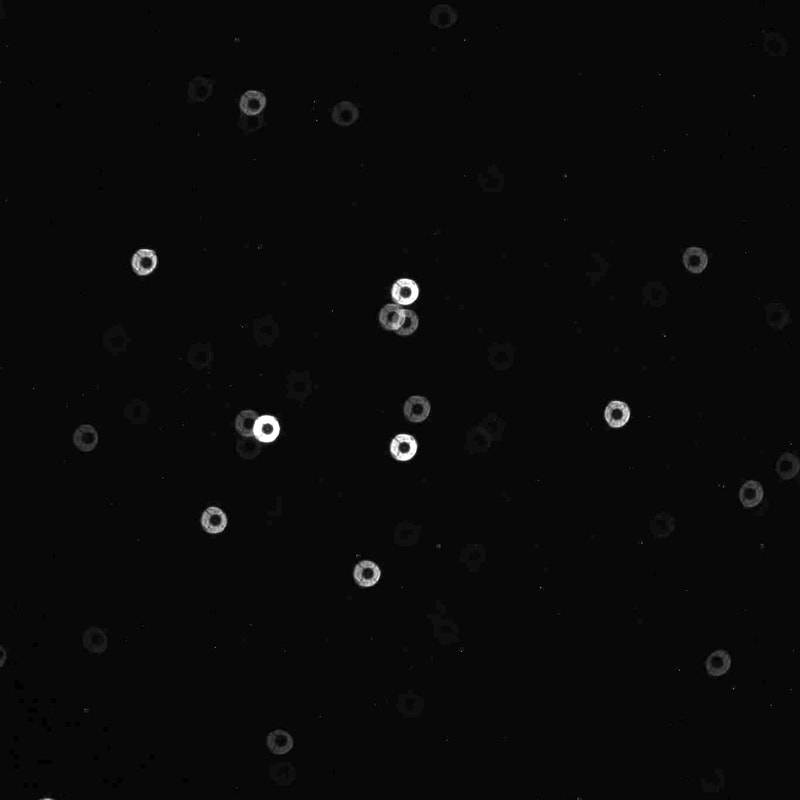

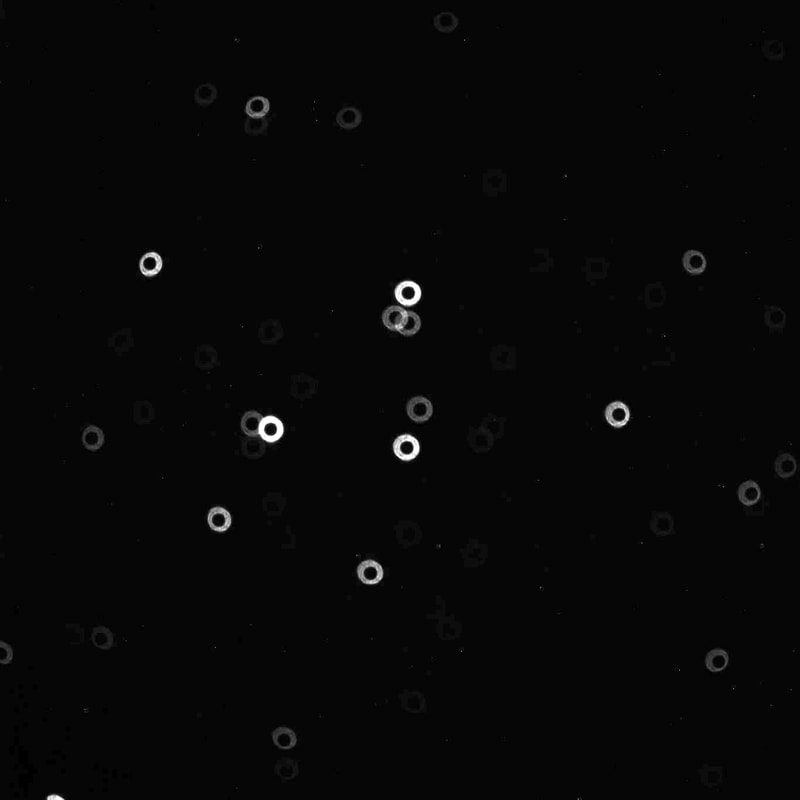

First, so much of the light coming from a target like the Orion Nebula is actually outside of our ability to see it with our eyes. For example, the principal “alpha” line of glowing (ionized) hydrogen is emitted at 656.3nm, which many people can barely perceive visually since it sits slightly beyond the 650nm upper end of that effective visual range. Making matters worse, our black & white-sensing rods are the major workhorses when doing low-light astronomy, and they actually have worse spectral capability than our cones. I’ll spare the reader a discussion of “scotopic" vs. "photopic" vision and the spectral curves that show how rods and cones compare in this regard, but doing so would show that hydrogen rich targets like emission nebulae have a lower chance of visual detection than other objects in these light-starved environments. In other words, not only do the color-sensing cones remain inactive during visual observing, our rods lack the ability to catch the illumination from sources of light that glow in the upper reddish regions of our vision.

The second key point to be made is that light waves exist far outside our eyes’ capabilities of seeing them. A camera with a silicon sensor (CCD or CMOS) can see a great many wavelengths that we cannot, including UV wavelengths down to around 200nm and near-IR wavelengths out to roughly 1100nm.

In fact, an astronomy camera does not have ANY biological limitation, in either the quality or the quantity of light that it can record. This fact can be leveraged fully by taking additional data with a special filter that ONLY passes or “sees” the glowing hydrogen. This is why I enhanced this image with hydrogen-alpha data. It provides details to the nebula that most eyes see poorly, no matter how large the telescope. So when you see astrophotos advertised to have an “Ha” component, it simply means they’ve added something real to the image that you didn’t know was there.
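If it helps to see the comparison laid out, here is a minimal sketch that checks which of the wavelength ranges mentioned in this post actually contain the hydrogen-alpha line. The band edges are the approximate figures quoted in the text (the silicon sensor range in particular is a rough assumption, since real cameras vary), and the names are purely illustrative.

```python
# Approximate wavelength bands in nanometers, using the rough figures from this post.
# These are illustrative assumptions, not measured response curves.
BANDS_NM = {
    "cone vision (full range)": (400.0, 700.0),
    "cone vision (effective)": (450.0, 650.0),
    "silicon camera sensor": (200.0, 1100.0),
}

H_ALPHA_NM = 656.3  # hydrogen-alpha emission line


def bands_containing(wavelength_nm: float) -> list[str]:
    """Return the names of every band whose limits include the wavelength."""
    return [
        name
        for name, (low, high) in BANDS_NM.items()
        if low <= wavelength_nm <= high
    ]


for name in bands_containing(H_ALPHA_NM):
    print(name)
# prints:
# cone vision (full range)
# silicon camera sensor
```

Hydrogen-alpha lands inside the full cone range but outside the effective one, and comfortably inside what a silicon sensor can record. A hard cutoff like this is a simplification, of course; real sensitivity fades gradually toward the edges.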

And isn’t that the magic of astronomy, where our telescopes and cameras can reveal the mystery of the cosmos, going far beyond what our biology can accomplish by itself? Moreover, doesn’t that say something about what we often regard as “real” vs. “unreal”? Philosophically, just because you can’t see it or lack the tools to measure it, it doesn’t mean that it doesn’t exist, right?

Amusingly to me, Webster’s defines light thusly…

"light" - /līt/ - noun

1. The natural agent that stimulates sight and makes things visible. Ex..."the light of the sun".

After our conversation, this definition of light raises the question, "So if light is what stimulates sight and makes things visible, then can we properly call those wavelengths outside our human vision light?" After all, if you can’t see it, is it truly “light” by definition? As such, "light" is very much a human construct, the amount and quality of it being highly individual among us all.

But I guess that’s better than calling it electromagnetic radiation!

Hope you enjoyed the image…
